What Makes Building Safe AI So Hard?

Creating a traditional computer program involves a fixed sequence of steps. First, we need to understand the problem and translate it into formal logic. Then, we implement that logic in code. Perhaps surprisingly, implementing a program’s logic is often the comparatively easy part. The challenging part is understanding and translating the problem.

The more complex systems become, the more brainpower we spend on understanding them. It becomes necessary to distill the problem and its logic into components, which are easier to work with. Given an input to a component, we know which output to expect. Even before writing a single line of code, we formalize these expectations in contracts. These contracts describe how the various components interact with each other and, ultimately, how the overall system interacts with the real world. Once the software is written, we can verify that the contracts hold. This process makes the concept of failure transparent and clearly defined.
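To make the idea of a contract concrete, here is a minimal sketch in Python. The component `braking_distance_m`, its default deceleration, and the contracted speed range are hypothetical and chosen only for illustration. The point is that the valid inputs and the guaranteed output are written down explicitly, so a violation is a clearly defined failure.

```python
def braking_distance_m(speed_kmh: float, deceleration_ms2: float = 7.0) -> float:
    """Hypothetical component: distance in metres needed to stop from a given speed."""
    # Preconditions: the inputs this component promises to handle.
    if not 0.0 <= speed_kmh <= 300.0:
        raise ValueError("speed_kmh outside the contracted range [0, 300]")
    if deceleration_ms2 <= 0.0:
        raise ValueError("deceleration_ms2 must be positive")

    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    distance = speed_ms ** 2 / (2.0 * deceleration_ms2)

    # Postcondition: what callers may rely on.
    assert distance >= 0.0, "contract violated: negative braking distance"
    return distance
```

A caller that passes a speed of -1 km/h gets an immediate, explicit error instead of a silently wrong result, and a test suite can check the postcondition for every input in the contracted range.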

This way, we have managed to build extremely safe software systems that can operate in real-world environments. For example, software autopilots for airplanes, which have been in use for half a century [1], are expected to fail less than once in a billion flight hours. To put this in perspective, think about flying a Boeing 747 around Earth 22 million times [2]. We don’t expect it to fail once in that time! Flying is the safest mode of transport [3] for a good reason. It is designed with failure in mind.

The New Era of Machine Learning

But things have changed over the past decade. The idea of letting a computer learn program behavior from data has heralded a new era of machine learning, which is now one of the most powerful tools of our age.

Machine learning can leverage statistical dependencies in data to learn complex input-output mappings. The program behavior is inferred from data. Therefore, we no longer need to translate the problem into formal logic, distill that logic, and implement it in code. Instead, the computer does so from data. To some extent, we don’t even have to understand the problem anymore, or at least we are fooled into believing that we don’t.
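The contrast can be sketched with a toy example in Python. Everything here is hypothetical: the overheating scenario, the six data points, and the simple threshold learner stand in for a real dataset and a real model. The hand-written rule encodes a human decision, while the learned rule is whatever best fits the samples.

```python
def hand_written_rule(temperature_c: float) -> bool:
    """Traditional approach: a human understands the problem and picks the threshold."""
    return temperature_c > 80.0


def learn_threshold(samples: list[tuple[float, bool]]) -> float:
    """Learned approach: pick the threshold that misclassifies the fewest samples."""
    values = sorted(x for x, _ in samples)
    # Candidate thresholds: midpoints between neighbouring observed values.
    candidates = [(a + b) / 2.0 for a, b in zip(values, values[1:])]

    def errors(threshold: float) -> int:
        return sum((x > threshold) != label for x, label in samples)

    return min(candidates, key=errors)


# Hypothetical labelled observations: (temperature in Celsius, overheated?)
training_data = [
    (61.0, False), (70.0, False), (78.0, False),
    (84.0, True), (90.0, True), (97.0, True),
]
learned = learn_threshold(training_data)
print(learned)  # 81.0 for this particular dataset
```

Nobody wrote down the value 81.0. It follows entirely from the samples, and a different dataset would yield a different rule. Nothing in the data says how the rule should behave for inputs unlike anything it has seen, such as a faulty sensor reading.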

Through machine learning, a computer can learn behaviors that are much more complex than anything humans can describe with hand-written rules. On well-designed datasets, or sometimes even in well-controlled environments, we can now do things that we couldn’t even imagine with traditional software.

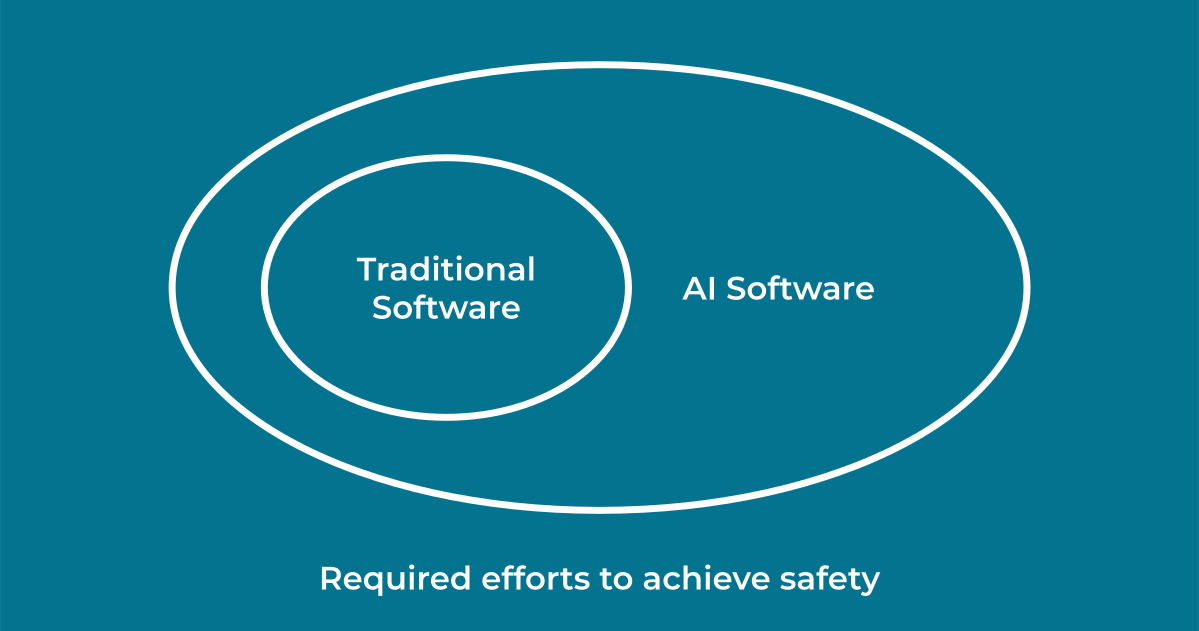

AI systems have all of the developmental and operational problems of traditional code, plus a vast additional set of AI-specific issues. AI software typically operates with more uncertainty, and it is challenging to demonstrate its safety and compliance.

Traditionally, we left the difficult parts – like understanding and translating the problem – to humans. We explicitly defined the behavior of traditional programs. Now machine learning solves this for us. It automatically distills the problem and its assumptions into a model. As a result, we can learn much more complicated program behaviors. But, at the same time, the understanding and behavioral guarantees of traditional software are lost. The contracts, which we previously relied on, no longer exist. This makes it much more difficult to analyze AI behavior, let alone to demonstrate its safety.

A New Mindset for AI Development

AI systems have the same developmental and operational problems as traditional software, plus a vast set of AI-specific issues. As a result, companies can create impressive demos but fail to translate them into real products. Or, even worse, they operate AI software with a lot more uncertainty. This has already led to catastrophic consequences.

Regulatory organizations, industry-specific working groups, and researchers are investigating how existing safety standards can be extended to cover the additional challenges of AI software. With decades of experience certifying traditional software, they know that safety follows from adopting a system-level view. Moreover, it requires a mindset that holds all stages of the development process accountable, from requirements collection to end-user interaction.

AI development teams must adopt a similar top-down approach. Crucially, this means a development workflow that looks beyond the model. We need to systematically combine the best practices from traditional software with the tools specific to AI. Only then can we reestablish the contracts that traditional software relies on, reduce the uncertainty around AI applications, and achieve the failure rates that mission-critical applications require.
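One small piece of such a workflow can be sketched in Python: wrapping a learned model in an explicit runtime contract. This is only an illustration under stated assumptions, not a complete safety argument. The helper `with_contract`, the stand-in `toy_model`, the ranges, and the fallback value are all hypothetical.

```python
from typing import Callable


def with_contract(
    model: Callable[[float], float],
    input_range: tuple[float, float],
    output_range: tuple[float, float],
    fallback: float,
) -> Callable[[float], float]:
    """Wrap a model so that it only answers inside an explicitly stated envelope."""
    low_in, high_in = input_range
    low_out, high_out = output_range

    def guarded(x: float) -> float:
        # Precondition: refuse inputs outside the range the model was validated on.
        if not low_in <= x <= high_in:
            return fallback
        y = model(x)
        # Postcondition: reject outputs the rest of the system cannot handle.
        if not low_out <= y <= high_out:
            return fallback
        return y

    return guarded


def toy_model(x: float) -> float:
    """Stand-in for a learned model; a real one would be loaded from training."""
    return 0.5 * x + 1.0


guarded_model = with_contract(toy_model, (0.0, 10.0), (0.0, 5.0), fallback=0.0)
print(guarded_model(4.0))   # 3.0: inside both ranges
print(guarded_model(20.0))  # 0.0: input outside the validated range
print(guarded_model(9.0))   # 0.0: output 5.5 exceeds the allowed range
```

The guard does not make the model itself any more predictable. It only makes the boundary of acceptable behavior explicit again, so that the surrounding system can define and test what counts as a failure.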

With quickly evolving regulatory standards and improving machine learning tools, we now have a chance to get this right. Both sides of the table, regulators and industry, need to work together to create frameworks that support innovation, not stifle it.

Thanks to Matthias, Mateo, Rui, Anna, Alex and Ina for reading and commenting on drafts of this article.

1. Human-Centered Aviation Automation: Principles and Guidelines (Charles E. Billings, February 1996)

2. Assuming that planet Earth has a circumference of roughly 40,000 km and that a Boeing 747 has a cruise speed of around 900 km/h, one trip around takes about 45 hours, so we would need to repeat it roughly 22 million times to be airborne for a billion hours.

3. IATA Economics' Chart of the Week (IATA, 23 February 2018)